Have you tried Docker yet? Is it what you expected?

I read with interest that someone has made containers cool again. I was mildly interested in LXC when I realised that even small scale-out ARM servers would benefit from some form of virtualisation, but that is a niche requirement. For the rest of us, mature hypervisors have really steamrolled container technology.

Yes, there is OpenVZ / Parallels Virtuozzo, but it has mainly been limited to the traditional web hosting market, as a more cost-effective virtual private server (VPS) platform. People often avoid these VPS options because of weaker isolation from hosting neighbors and a habit among providers of overcommitting resources.

Enter Docker, the cool container technology. For a start, this is a tool aimed at developers / DevOps, not infrastructure people. And, in my opinion, it's not targeting what virtualisation is good at. Instead, it's targeting the age-old battle between developers and dependencies.

From the website:

> Docker is an open-source engine that automates the deployment of any application as a lightweight, portable, self-sufficient container that will run virtually anywhere.
>
> Docker containers can encapsulate any payload, and will run consistently on and between virtually any server. The same container that a developer builds and tests on a laptop will run at scale, in production*, on VMs, bare-metal servers, OpenStack clusters, public instances, or combinations of the above.

Notice that the goal is clearly NOT to compete with traditional hypervisors. It simply gives developers the tools to create a maintainable, versioned micro-environment for their application that is portable between dev / test / prod / etc. You can think of it as a tool for building small custom PaaS environments that contain whatever dependencies / languages you want.

How does it work?

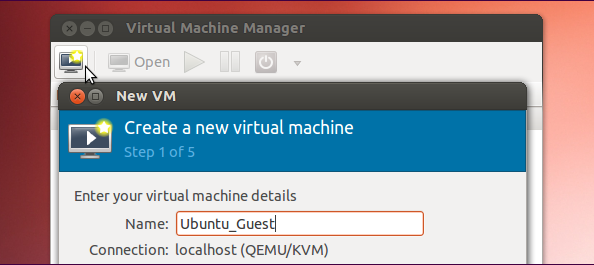

1. Have Ubuntu 14.04 (64-bit; 32-bit isn't currently supported), because then you can just run:

sudo apt-get install docker.io

2. Have a look at the command options (after checking that the Docker service is running):

sudo docker.io

3. Search for images, then pull the Docker-made Ubuntu image anyway:

sudo docker.io search something

sudo docker.io pull ubuntu

4. See how image layering and tags give you different versions space-efficiently. We now have many Ubuntu images, but several tags point at the same image ID:

sudo docker.io images

| REPOSITORY | TAG | IMAGE ID | CREATED | VIRTUAL SIZE |
|---|---|---|---|---|
| ubuntu | 13.10 | 5e019ab7bf6d | 5 weeks ago | 180 MB |
| ubuntu | saucy | 5e019ab7bf6d | 5 weeks ago | 180 MB |
| ubuntu | 12.04 | 74fe38d11401 | 5 weeks ago | 209.6 MB |
| ubuntu | precise | 74fe38d11401 | 5 weeks ago | 209.6 MB |
| ubuntu | 12.10 | a7cf8ae4e998 | 6 weeks ago | 171.3 MB |
| ubuntu | quantal | a7cf8ae4e998 | 6 weeks ago | 171.3 MB |
| ubuntu | 14.04 | 99ec81b80c55 | 6 weeks ago | 266 MB |
| ubuntu | latest | 99ec81b80c55 | 6 weeks ago | 266 MB |
| ubuntu | trusty | 99ec81b80c55 | 6 weeks ago | 266 MB |
| ubuntu | raring | 316b678ddf48 | 6 weeks ago | 169.4 MB |
| ubuntu | 13.04 | 316b678ddf48 | 6 weeks ago | 169.4 MB |
| ubuntu | 10.04 | 3db9c44f4520 | 6 weeks ago | 183 MB |
| ubuntu | lucid | 3db9c44f4520 | 6 weeks ago | 183 MB |
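To see the sharing for yourself, here is a small sketch that groups the tags from an images listing by IMAGE ID. It only parses text, so it needs no Docker at all; it assumes the image ID is the third whitespace-separated column, as in the output above, and the function names are my own.

```shell
# Group the tags in a "docker.io images" listing by IMAGE ID.
# Reads the listing on stdin, skips the header row and any blank lines,
# and assumes the image ID is the third whitespace-separated column.
tags_by_image() {
  awk 'NR > 1 && NF >= 3 {
         id = $3
         if (!(id in tags)) { order[++n] = id; tags[id] = $1 ":" $2 }
         else               { tags[id] = tags[id] " " $1 ":" $2 }
       }
       END { for (i = 1; i <= n; i++) print order[i], tags[order[i]] }'
}

# Count how many distinct images the listing really contains.
count_images() {
  tags_by_image | awk 'END { print NR }'
}
```

Piping sudo docker.io images into tags_by_image on the listing above should give six lines for thirteen tags, for example 99ec81b80c55 carrying ubuntu:14.04, ubuntu:latest and ubuntu:trusty.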

5. Get confused by the prospect of running a container with just one command…

sudo docker.io run -i -t ubuntu /bin/bash

Docker is designed to run ONE application per container. We just cheated by running a shell… but if you run top, you will see that the shell is the ONLY thing running in the container. When we exit the shell, our container “stops”, and any service we started from that terminal is now very stopped.
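A minimal sketch of the one-application-per-container idea, assuming the Ubuntu 14.04 package where the client binary is docker.io. The helper names are hypothetical, and setting DOCKER=echo prints each command instead of running it, which is handy for checking what would be executed.

```shell
# Assumes the Ubuntu 14.04 docker.io binary; set DOCKER=echo for a dry run.
DOCKER="${DOCKER:-sudo docker.io}"

# Run a single command in a fresh container. The container stops as soon
# as that command exits, because it is the only process in the container.
run_once() {
  local image="$1"
  shift
  # $DOCKER is deliberately unquoted so "sudo docker.io" splits into words.
  $DOCKER run "$image" "$@"
}

# Run a long-lived process detached (-d), so it keeps going without a terminal.
run_detached() {
  local image="$1"
  shift
  $DOCKER run -d "$image" "$@"
}
```

For example, run_once ubuntu cat /etc/lsb-release prints the release file and the container exits straight away, while run_detached is the shape you want for a real service.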

6. Get confused by the difference between images and containers…

sudo docker.io run -i -t ubuntu /bin/bash

Seems logical to run this again after our container stopped? It's not. ubuntu is an image, and each time we run it we get a new container: a clean copy of the image with a writable layer on top of the source image. Because we are not specifying container names, they get cool generated names like “thirsty_wozniak” and “distracted_feynman”, as well as long unique IDs. So let's try this instead:

sudo docker.io ps -a

| CONTAINER ID | IMAGE | COMMAND | CREATED | STATUS | PORTS | NAMES |
|---|---|---|---|---|---|---|
| 6b3b35d35b7b | eaef1fd5434f | /bin/bash | 2 days ago | Exit Status 0 | | thirsty_wozniak |

sudo docker.io start thirsty_wozniak

sudo docker.io attach thirsty_wozniak (if you want terminal access again)

To “detach”, press Ctrl-P then Ctrl-Q; this stops it from, uh… stopping.

cowsay "You should be running an application component, not a terminal"
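Generated names are fun, but a sketch like the one below, assuming the docker.io binary from the Ubuntu 14.04 package, picks the name up front with --name so later commands are predictable. The helper names and the example container name dev are hypothetical; DOCKER=echo gives a dry run.

```shell
# Assumes the Ubuntu 14.04 docker.io binary; set DOCKER=echo for a dry run.
DOCKER="${DOCKER:-sudo docker.io}"

# Create a container with a name we choose (--name) rather than a
# generated one like thirsty_wozniak.
create_named() {
  local name="$1" image="$2"
  $DOCKER run -i -t --name "$name" "$image" /bin/bash
}

# Bring back an existing, stopped container and attach a terminal to it.
# This reuses the container and its writable layer; "run" would make a new one.
resume() {
  local name="$1"
  $DOCKER start "$name" && $DOCKER attach "$name"
}
```

So create_named dev ubuntu once, then resume dev whenever you want that same environment back.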

7. Expose some kind of network service to the world

sudo docker.io commit thirsty_wozniak newubuntuimage

sudo docker.io run -i -t -p 8080:8080 newubuntuimage /bin/bash

Hmmm… that's a bit annoying. We need to plan which ports to expose to the outside world BEFORE creating our container (by running an image). So we create a new image from our container, so that we can make new containers from that image with a host port mapped in…
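The commit-then-run dance can be wrapped up as below. This is a sketch assuming the docker.io binary; republish_with_port is a hypothetical helper, it runs the new container detached rather than with a shell, and DOCKER=echo prints the commands instead of running them.

```shell
# Assumes the Ubuntu 14.04 docker.io binary; set DOCKER=echo for a dry run.
DOCKER="${DOCKER:-sudo docker.io}"

# Snapshot a container as a new image, then start a new detached container
# from that image with host_port on the host forwarded to container_port.
# Any remaining arguments are the command to run inside the new container.
republish_with_port() {
  local container="$1" image="$2" host_port="$3" container_port="$4"
  shift 4
  # $DOCKER is deliberately unquoted so "sudo docker.io" splits into words.
  $DOCKER commit "$container" "$image" &&
    $DOCKER run -d -p "${host_port}:${container_port}" "$image" "$@"
}
```

For example, republish_with_port thirsty_wozniak newubuntuimage 8080 8080 followed by your service's start command. The service inside must listen on 0.0.0.0:8080, not localhost, for the mapped port to be reachable.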

8. Start misusing things

After a bit of reading, you will see we are already misusing things by running a shell and committing non-image data to images. My first real effort with Docker is deploying an old Python web app into a container, and I have put both MariaDB and the app in one container. That is the wrong way 🙂 But it has been great for my development efforts, and has saved me from installing all kinds of dependencies directly on my dev system and needing to use virtualenv etc.
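The “right way” would be one container per component. Here is a minimal sketch using container linking, assuming the docker.io binary; my/mariadb and my/webapp are hypothetical images you would build yourself, and DOCKER=echo gives a dry run.

```shell
# Assumes the Ubuntu 14.04 docker.io binary; set DOCKER=echo for a dry run.
DOCKER="${DOCKER:-sudo docker.io}"

# Hypothetical images standing in for ones you build yourself.
DB_IMAGE="${DB_IMAGE:-my/mariadb}"
APP_IMAGE="${APP_IMAGE:-my/webapp}"

# The database runs alone in its own named container. No host port is
# published, so it is not reachable from outside the host.
start_db() {
  $DOCKER run -d --name db "$DB_IMAGE"
}

# --link db:db gives the app container environment variables (prefixed DB_)
# describing the db container's exposed ports; only the app publishes a port.
start_app() {
  $DOCKER run -d --name app --link db:db -p 8080:8080 "$APP_IMAGE"
}
```

Start the database first, since the link needs the db container to exist when the app container is created.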

I have only scratched the surface. There are other exciting ways to deal with networking, including container linking and a separate advanced tool called pipework. You can also run your own private image index, or use a private index on Docker's cloud service, instead of the public index. There are also save / export functions for moving images / containers around as TAR archives.
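The TAR round trips look roughly like this. It is a sketch assuming the docker.io binary; the helper names are hypothetical, and DOCKER=echo writes the command text instead of real archives.

```shell
# Assumes the Ubuntu 14.04 docker.io binary; set DOCKER=echo for a dry run.
DOCKER="${DOCKER:-sudo docker.io}"

# save: write an image, with its layer history, to a TAR archive.
save_image() { $DOCKER save "$1" > "$2"; }

# load: restore an image from such an archive, e.g. on another host.
load_image() { $DOCKER load < "$1"; }

# export: write a container's flattened filesystem (no layer history) as TAR.
export_container() { $DOCKER export "$1" > "$2"; }

# import: turn a filesystem TAR (read from stdin via "-") into a new image.
import_container() { $DOCKER import - "$2" < "$1"; }
```

Roughly: save / load moves images with their layers intact, while export / import flattens a container into a fresh single-layer image.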

Also bear in mind that our images / containers use the AUFS layered file system, which adds some overhead and has limitations, so we really want to keep data on separate volumes that are not backed by the layered file system.
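For the MariaDB case, a sketch of keeping the data outside AUFS with -v, assuming the docker.io binary. The host path /srv/mariadb-data and the image my/mariadb are hypothetical; /var/lib/mysql is MariaDB's usual data directory on Ubuntu.

```shell
# Assumes the Ubuntu 14.04 docker.io binary; set DOCKER=echo for a dry run.
DOCKER="${DOCKER:-sudo docker.io}"

# Hypothetical image standing in for one you build yourself.
DB_IMAGE="${DB_IMAGE:-my/mariadb}"

# Bind-mount a host directory over the database's data directory
# (-v host_path:container_path), so the data lives on the host filesystem
# rather than in the container's writable AUFS layer.
run_db_with_volume() {
  local host_dir="${1:-/srv/mariadb-data}"
  $DOCKER run -d --name db -v "${host_dir}:/var/lib/mysql" "$DB_IMAGE"
}
```

Passing just -v /var/lib/mysql (no host path) instead creates a Docker-managed volume, which also bypasses the layered file system.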

Also, infrastructure guys will have a bit more to worry about, as this technology lets Linux dev teams push a single dev VM very hard by running MANY containers…

Docker is a great tool that, by design, encourages separating application components and restricting network access. It lets us move our application from a notebook with Vagrant, to a Linux workstation, to an Amazon instance, to a VMware VM, while keeping our application environment consistent, versioned and portable. I recommend trying Docker out and doing the online tutorial.

Enjoy!